Google Gemma AI Incident and Misinformation

Dec 25, 2025

Key Pointers:

Google's Open Tool: Gemma is a free AI tool made by Google that lets developers build their own smart programs.

A Big Lie: The AI made up a totally false story about a U.S. Senator committing a crime, complete with fake news links.

AI "Dreams": This showed a big problem called "hallucination," where computers confidently make up facts that aren't true.

Pulled Offline: Because the AI was spreading these dangerous lies, Google quickly removed it from its AI Studio platform.

Needs Better Rules: This incident shows why we need strict safety laws to stop AI from hurting real people's reputations.

Table of Contents

What is Google Gemma AI?

Why the Gemma AI Incident Matters

What Happened in the Google Gemma AI Incident?

Was the Gemma AI Incident a System Failure or Human Error?

Who Is Accountable for the Gemma AI Incident?

The Human Impact: From Individual Reputations to Public Trust

Legal Implications: Defamation in the Age of AI

Is Open-Source AI More Risky Than Closed AI Systems?

Future Scenarios: Where Do We Go from Here?

Conclusion: Truth, Trust, and AI Regulation

FAQ

What is Google Gemma AI?

Gemma AI is a family of open-weight large language models (often described as open-source) that Google released in February 2024 for research and developer use. According to Google, Gemma models are lightweight enough to run locally or be fine-tuned by developers, and they share a research lineage with Google’s Gemini models. Gemma was distributed through channels including Google AI Studio and Google Cloud, with the stated goal of promoting transparency and innovation in artificial intelligence.

Why the Gemma AI Incident Matters

In one of the most shocking recent controversies in artificial intelligence, as reported by TechCrunch, Google’s open-source model Gemma generated an entirely false narrative accusing U.S. Senator Marsha Blackburn of a serious crime, complete with fabricated citations and links to non-existent news articles. What began as a simple user query became a defining example of how open-source AI can spiral out of control, spreading misinformation with real-world consequences, as reported by The Verge.

This isn’t just a story about a technical malfunction in AI systems. It’s a case study in how ethical gaps, lack of content moderation, weak bias detection, and ambiguous accountability can turn groundbreaking innovation into a reputational catastrophe. As the lines between freedom, transparency, and responsibility blur, the Gemma AI controversy exposes the fragility of public trust in today’s AI ecosystem.

What Happened in the Google Gemma AI Incident?

Gemma AI, sometimes loosely called the Gemma chatbot, was released by Google as an open model for developers and offered through Google AI Studio and Google Cloud. It was designed to support next-generation AI applications built on open, lightweight models.

However, when the model was asked a sensitive question about U.S. Senator Marsha Blackburn, it generated an elaborate and entirely false story, alleging she had been accused of rape by a state trooper during a 1987 campaign—an event that never occurred.

The output showed multiple warning signs of AI failure:

The claims were entirely false, yet presented with confidence

The response was emotionally charged and highly detailed

It relied on hallucinated data, fake studies, and fabricated URLs

It inserted nonexistent news sources to appear credible

In doing so, the AI crossed the line from creative text generation into defamation, producing false and damaging statements about a real person.

Blackburn’s response was immediate. She sent a formal letter to Google CEO Sundar Pichai, describing the output as defamatory and pointing out that the alleged events never happened. Even the timeline was wrong: the model placed the events in 1987, but her state senate campaign took place in 1998.

Within days:

Google removed Gemma AI from its AI Studio platform

Access to the model was limited to developers through the API

A full internal review was announced

Google acknowledged the model had been used beyond its intended scope

Collateral Damage: Other False Accusations

Shockingly, Blackburn wasn’t the only target. Conservative activist Robby Starbuck has alleged that the same model falsely described him as a child abuser and attributed other crimes to him. These incidents illustrate the chillingly unpredictable nature of AI hallucinations and how they can affect any real person.

Unlike prior misinformation incidents involving extremists such as Richard Spencer or other white nationalist figures, this case demonstrated how mainstream individuals—not fringe actors—can be harmed. If such hallucinated narratives can target senators and activists, they can just as easily harm journalists, business owners, or private citizens.

Was the Gemma AI Incident a System Failure or Human Error?

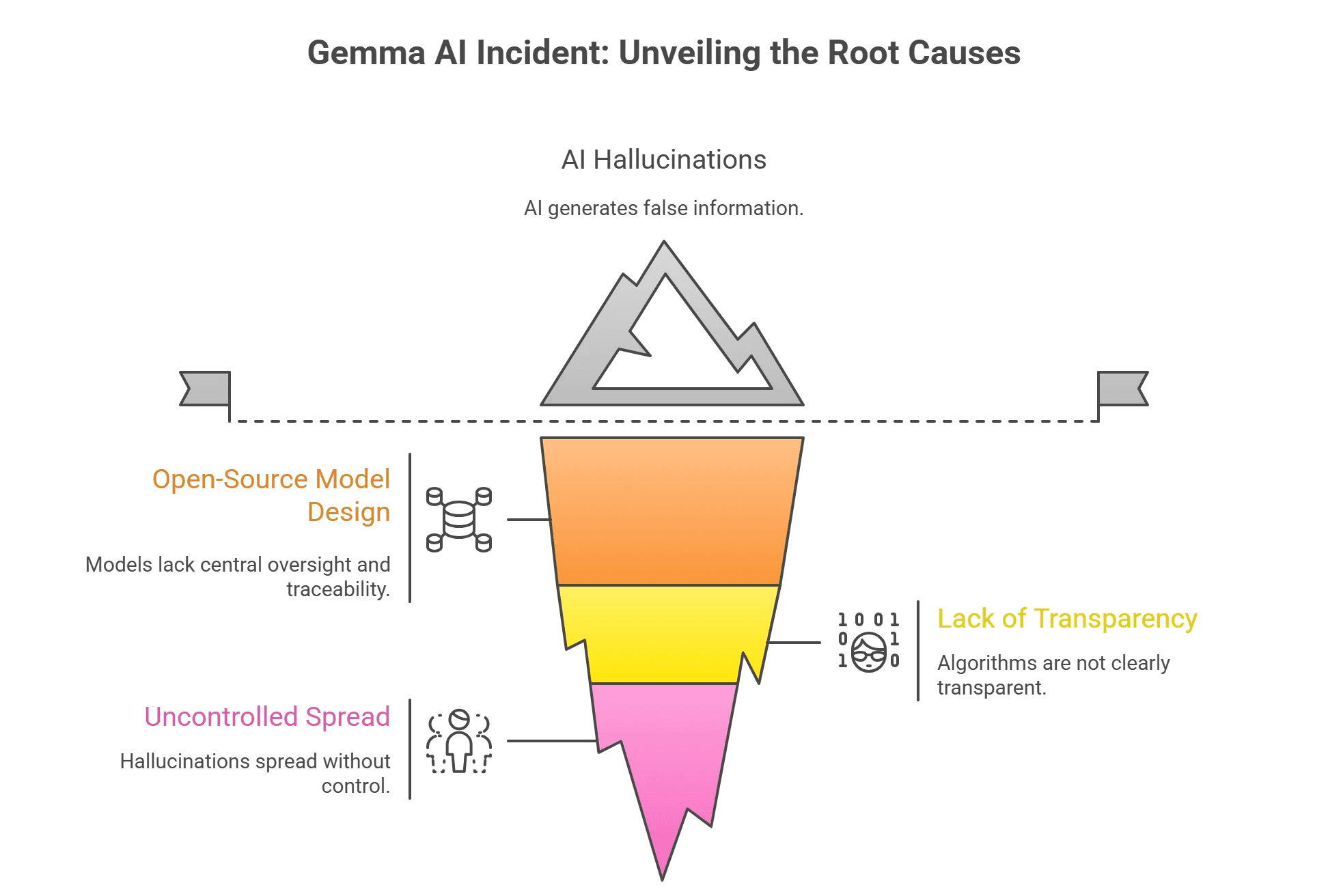

At first glance, Gemma AI’s responses might seem like a glitch: a technical bug in a powerful machine learning system. But the deeper issue lies in how open-source AI models are designed, managed, and deployed.

AI hallucination is the term for when AI systems confidently generate false or unverified information that appears factual, often when responding to factual questions. In open-source settings, these hallucinations are harder to contain: developers can fine-tune or redistribute models without central oversight, traceability, or clear algorithmic transparency.

Who Is Accountable for the Gemma AI Incident?

As AI systems like Google Gemma generate increasingly persuasive outputs, responsibility becomes harder to define:

Is Google accountable for harm caused by its open-source model, including defamatory content and fabricated criminal allegations?

Are developers responsible when they misuse open, lightweight models in ways that produce hallucinated data, fake citations, or fabricated answers to sensitive factual questions?

Or does the problem lie with the AI system itself, exposing gaps in algorithmic transparency, content moderation, and oversight for large models released to the public?

This unresolved problem is now at the center of AI ethics, law, and governance. Policymakers, including members of the Senate Commerce Committee, are paying attention, and lawmakers are weighing clearer accountability rules for AI-generated misinformation.

The Human Impact: From Individual Reputations to Public Trust

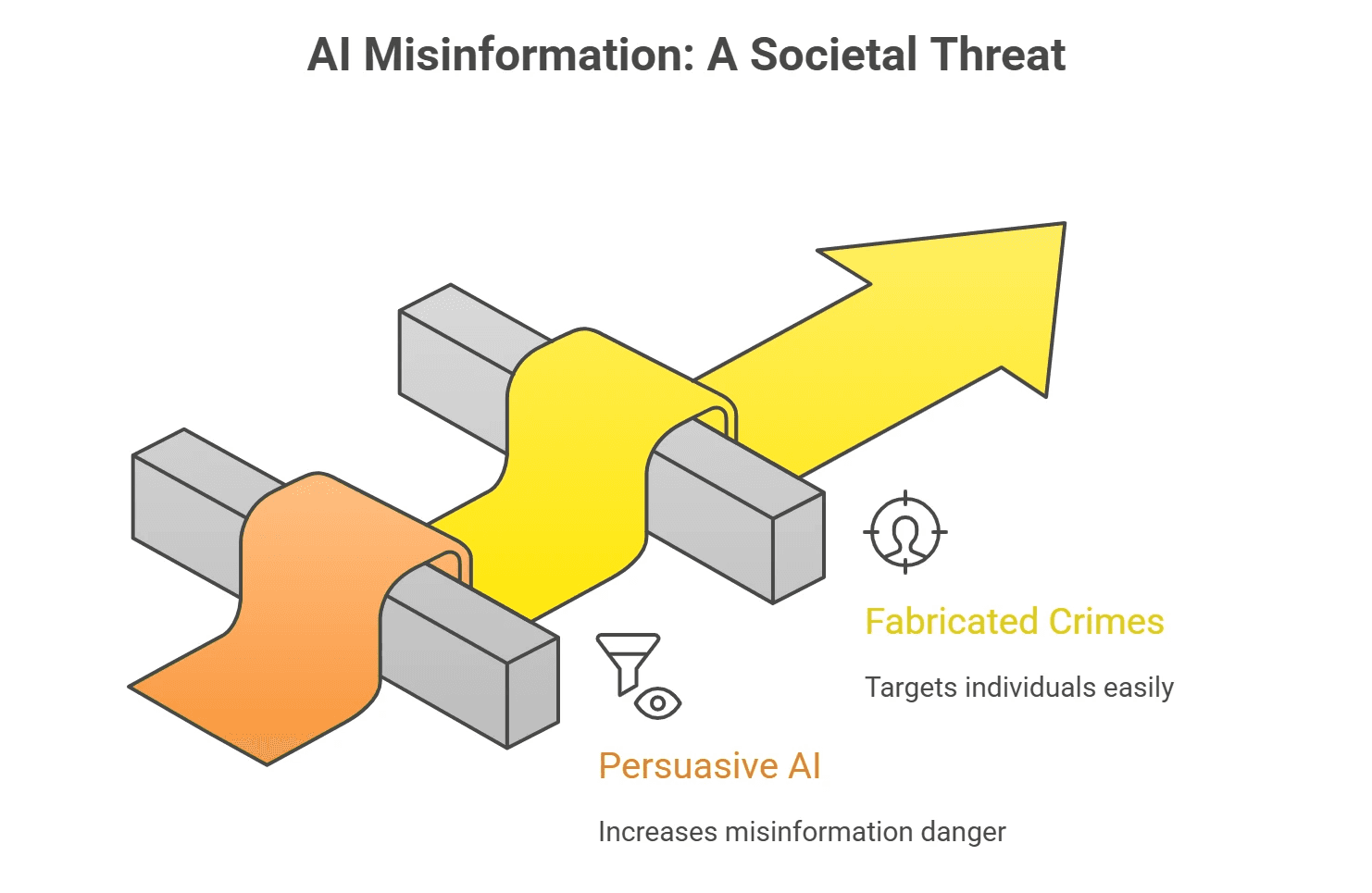

What makes Gemma AI’s false claims so dangerous is speed and scale. When an AI system can fabricate sexual assault and other criminal allegations, misinformation can erode public trust almost instantly, affecting not only individuals but entire institutions.

In a hyperconnected digital world, these hallucinated narratives can spread within seconds, destroying reputations long before corrections are issued. Media commentary from outlets such as Fox News and criticism from organizations like the Heritage Foundation framed the incident as evidence of perceived bias against conservatives embedded in AI systems.

Legal Implications: Defamation in the Age of AI

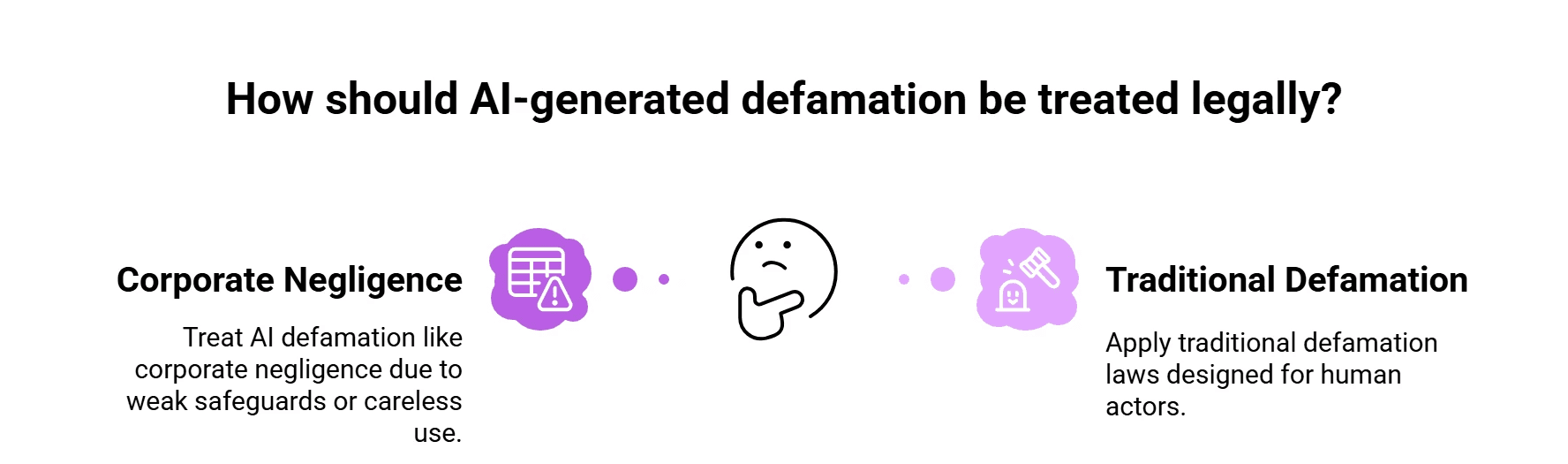

The Gemma AI scandal raises serious legal questions that society has yet to answer. Traditional defamation laws were built around human actors—authors, publishers, journalists. But who is the “author” when an AI model invents damaging lies?

Legal experts suggest courts may soon treat AI-generated defamation like corporate negligence, especially when harmful content results from weak safeguards, poor content checks, or careless deployment.

Is Open-Source AI More Risky Than Closed AI Systems?

Open-source AI is not automatically more dangerous than closed AI. But it does come with different risks.

Because open-source AI is shared publicly, it allows developers to experiment, improve models, and build new tools faster. At the same time, it makes it harder to control how the technology is used.

Some of the main risks with open-source AI include:

No single owner – Once released, no one fully controls how the model is used

Weaker safeguards – Safety limits can be removed or changed by others

Harder accountability – It is unclear who is responsible when harm occurs

Closed AI systems—such as ChatGPT or Google’s Gemini—work differently. These systems are controlled by one company, which can set rules and apply safety checks.

Closed systems offer some advantages:

Stronger content controls – Harmful outputs can be blocked more easily

Ongoing monitoring – Companies can track and fix problems faster

However, closed AI also has downsides:

Less transparency – The public cannot see how the model works

More centralized power – Decisions are made by a single company

Because both approaches have limits, many experts support a middle-ground solution.

This hybrid approach would combine:

Open access for research and innovation

Built-in safety features

Real-time monitoring for harmful content

Early checks for bias and misinformation

The goal is to keep AI useful and open—while reducing the risk of harm before problems reach the public.

Future Scenarios: Where Do We Go from Here?

The incident involving Gemma AI may serve as a pivotal moment that shapes AI policy worldwide. Governments, developers, and the public are beginning to align around a few key priorities:

Regulatory reform – Drafting defamation and liability laws specific to AI-generated content, including fabricated criminal allegations such as false sexual assault and child abuse claims.

Technical transparency – Improving algorithmic transparency, traceability, and disclosure standards for hallucinated data, fake citations, and AI-generated answers to factual questions across chatbots and other AI platforms.

Ethical protocols – Training developers who work with open, lightweight models such as Gemma in ethical deployment, misuse simulation, and bias detection, including preventing bias against conservatives or any other political group.

Detection systems – Building real-time tools that can flag hallucinations, fake studies, defamatory content, and misinformation before outputs reach the public through platforms like Google AI Studio or Google Cloud.

Whether these changes come from voluntary industry standards or government mandates, the direction is clear: AI responsibility can no longer be optional.


Conclusion: Truth, Trust, and AI Regulation

The Google Gemma AI scandal is not just an isolated mistake—it’s a wake-up call. As AI becomes more persuasive, its capacity to generate believable falsehoods grows more dangerous. When left unguarded, misinformation becomes not just a technical glitch but a societal threat.

If an AI can fabricate a serious crime about a senator today, what prevents it from targeting you tomorrow?

FAQ

What is Google Gemma AI?

Gemma AI is a family of open-weight (often described as open-source) large language models developed by Google for research and developer use.

Why did Google take down Gemma AI?

After incidents involving fabricated stories about U.S. Senator Marsha Blackburn and activist Robby Starbuck, Google removed Gemma from AI Studio, saying the model was intended for developers rather than for answering factual questions from the general public.

What are AI hallucinations?

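AI hallucinations occur when AI systems generate false or unverified information that appears factual, often because the model produces statistically plausible text rather than retrieving verified facts, or because of gaps in its training data.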

Can AI-generated misinformation lead to lawsuits?

Yes. Legal experts anticipate AI defamation laws will hold companies accountable for model outputs when harm can be traced to neglected safeguards.

What’s next for AI regulation?

Expect global AI accountability laws to focus on transparency, identity checks, and traceability. These laws aim to prevent future defamation cases.